What Is Deep Narrative Analysis Trying to Accomplish?

Deep Narrative Analysis (DNA) will reach its long-term goals through research and experimentation, taking small steps and refining our designs. Our ultimate goal is to create a tool that compares narratives and news articles and indicates where they align and diverge, what events are mentioned (or omitted), and what words are used. That comparison can then be used to understand whether a news article is biased, or whether certain themes are consistent across a set of narratives and articles. Regarding themes in narratives, DNA can be used to find evidence of which combinations of circumstances, actions and events are correlated with an individual's success or failure in a situation, such as overcoming addiction.

To achieve this long-term goal, we need to transform the text of a news article or narrative into a semantically rich, machine-processable format. Our choice for that format is a knowledge graph. The transformation uses syntactic and semantic processing to incrementally parse the text, and then outputs the results as an RDF encoding (examples are included below).

The sentences of the text are broken down into chunks (subject-verb-object components), then phrases (semantically related words), and lastly individual words and concepts. This is accomplished using spaCy's part-of-speech, dependency parsing and named entity recognition tooling. In addition, various rules and heuristics are applied that utilize idiom processing and knowledge from the domain (and from previous sentences) to determine the "most likely" semantics, or to indicate where human insight will be needed.

Due to the complexity and ambiguity of natural language, DNA assumes that human intervention and correction of the knowledge graph will be needed. We want to create a "good" parse that identifies the most important components of each sentence, and then allow the result to be edited while hiding the fact that there is a knowledge graph under the covers. (We are working on an interface to do that, and within the next few months, will post the code in our GitHub repository and discuss it on this blog.)

Let's look at an example parse from Erika Eckstut's life story (page 1 of Echoes of Memory, Vol 1 from the United States Holocaust Memorial Museum website). The first paragraph reads as follows:

Erika (Neuman) Eckstut was born on June 12, 1928, in Znojmo, a town in the Moravian region of Czechoslovakia with a Jewish community dating back to the thirteenth century. Her father was a respected attorney and an ardent Zionist who hoped to emigrate with his family to Palestine. In 1931, the Neumans moved to Stanesti, a town in the Romanian province of Bukovina, where Erika’s paternal grandparents lived.

The results of the processing create this RDF segment (written using the Turtle syntax) for the last sentence in the paragraph:

:PiT1931 a :PointInTime ; rdfs:label "1931" .

:PiT1931 :year 1931 .

:Event_814b0ade-d409 :text "In 1931, family moved to Stanesti, a town in the Romanian province ..." ; :sentence_offset 3 .

:Event_814b0ade-d409 :has_time :PiT1931 .

:family a :Person, :Collection ; rdfs:label "family" .

:Stanesti a :PopulatedPlace, :AdministrativeDivision ; rdfs:label "Stanesti" .

:Stanesti :admin_level 2 .

:Stanesti :country_name "Romania" .

geo:798549 :has_component :Stanesti .

:Event_814b0ade-d409 :has_destination :Stanesti .

:Event_814b0ade-d409 a :MovementTravelAndTransportation .

:Event_814b0ade-d409 :has_origin :Znojmo .

:Event_814b0ade-d409 :has_active_agent :family .

:Event_814b0ade-d409 rdfs:label "family moved" .

:Event_814b0ade-d409 :sentiment 0.0 .

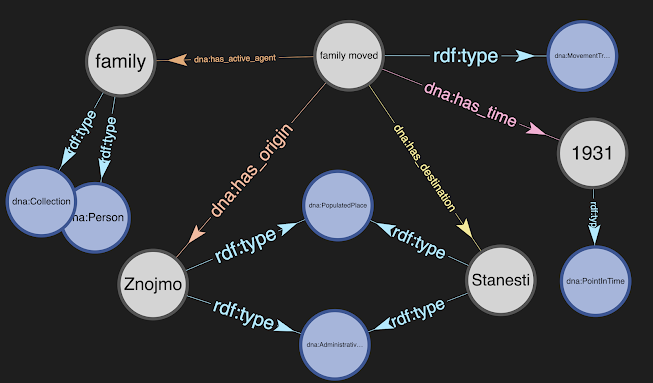

Using Stardog Studio to visualize this set of triples produces the following image:

In this image, classes are shown in light blue, while instances are shown in grey. The classes are defined in the DNA ontology.

Reviewing the triples, a few things can be observed:

- The sentence is reduced to its main subject-verb-object and summarized using the label, "family moved"

- The details of the movement event are related via predicates (who = has_active_agent, when = has_time, where = has_destination)

- A movement event to some destination location implies that there is an origination point (in this case, DNA identified Znojmo as the last known location)

- Details about Stanesti have been added using GeoNames data (illustrating that additional information can be obtained from online and domain-specific sources and used to supplement the text of the narrative or as explanation for the user)
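The pattern in these triples (one event IRI, with who/when/where attached through predicates) can be sketched in a few lines of Python. This is only an illustration under stated assumptions: the `event_to_turtle` helper and its dictionary keys are hypothetical and are not taken from the DNA code base, and the "use the last known location as the origin" rule is a simplified reading of the third observation above. The predicate names are the ones shown in the Turtle.

```python
def event_to_turtle(event_id, details, last_known_location=None):
    """Serialize one event dictionary as a list of Turtle statements.

    `details` uses hypothetical keys (label, event_class, agent, time,
    destination); the predicates mirror the ones in the example above.
    """
    subject = f":{event_id}"
    triples = [
        f"{subject} a :{details['event_class']} .",
        f'{subject} rdfs:label "{details["label"]}" .',
    ]
    # who / when / where, emitted only when the parse found them
    predicate_for = {
        "agent": ":has_active_agent",
        "time": ":has_time",
        "destination": ":has_destination",
    }
    for key, predicate in predicate_for.items():
        if key in details:
            triples.append(f"{subject} {predicate} :{details[key]} .")
    # Assumed rule: a move to a destination implies an origin, so fall
    # back to the last location seen earlier in the narrative.
    if "destination" in details and last_known_location:
        triples.append(f"{subject} :has_origin :{last_known_location} .")
    return triples


moved = {
    "label": "family moved",
    "event_class": "MovementTravelAndTransportation",
    "agent": "family",
    "time": "PiT1931",
    "destination": "Stanesti",
}
turtle_lines = event_to_turtle(
    "Event_814b0ade-d409", moved, last_known_location="Znojmo"
)
print("\n".join(turtle_lines))
```

Keeping the who/when/where predicates in one small table makes it easy to see which parts of the sentence were captured and which were inferred from context.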

Here is another excerpt from that same narrative: "In 1937, however, members of the fascist Iron Guard tried to remove Erika’s father from his position as the chief civil official in Stanesti. Eventually, a court cleared him of the fabricated charges and he was restored to his post." The corresponding knowledge graph for the second sentence of the excerpt is:

:Event_87a5e606-f200 :text "Eventually a court cleared him of the fabricated charges." ;

:sentence_offset 9 .

:Event_87a5e606-f200 :has_earliest_beginning :PiT1937 .

:court_c40d7fdf-169e a :GovernmentOrganization ; rdfs:label "court" .

:fabricated_charges_2272b371-3912 a :LegalEvent, :Collection .

:fabricated_charges_2272b371-3912 rdfs:label "fabricated charges" .

:Event_87a5e606-f200 :has_topic :fabricated_charges_2272b371-3912 .

:Event_87a5e606-f200 a :RemovalAndRestriction .

:Event_87a5e606-f200 :has_active_agent :court_c40d7fdf-169e .

:Event_87a5e606-f200 :has_affected_agent :father .

:Event_87a5e606-f200 :has_location :Stanesti .

:Event_87a5e606-f200 rdfs:label "court cleared father of fabricated charges" .

:Event_87a5e606-f200 :sentiment 0.0 .

:Event_bb94b5d6-e8e7 :text "he was restored to his post." ; :sentence_offset 10 .

:Event_bb94b5d6-e8e7 :has_earliest_beginning :PiT1937 .

:post_2905a43e-b249 a :Employment ; rdfs:label "post" .

:Event_bb94b5d6-e8e7 :has_topic :post_2905a43e-b249 .

:Event_bb94b5d6-e8e7 a :ReturnRecoveryAndRelease .

:Event_bb94b5d6-e8e7 :has_affected_agent :father .

:Event_bb94b5d6-e8e7 :has_location :Stanesti .

:Event_bb94b5d6-e8e7 rdfs:label "father restored to post" .

:Event_bb94b5d6-e8e7 :sentiment 0.0 .

The visualization of the excerpt (including expansion of the details related to the narrator's father, captured earlier in the parse) is shown in this second image:

What are some items of note from the above triples and image?

- The pronouns (co-references), "him" and "he", are resolved by DNA to the narrator's father

  - The father was specifically mentioned in the first sentence of the excerpt, as the one being removed from his position

  - Using the knowledge graph, the father's details can be retrieved and are displayed in the image, even though they did not occur in the sentence (or even in the same paragraph)

- The concepts of the father's "position" (from which he is removed) and "post" (to which he is restored) are identified using IRIs with UUID suffixes (specifically, the IRIs are dna:position_af9c611e-ba8d and dna:post_2905a43e-b249)

  - UUIDs are appended to create unique identifiers, since other positions/posts may be discussed in the narrative

  - Both instances are defined to be of rdf:type dna:Employment, and in reality reference the same "employment"

  - In a future DNA update, this type of coreference will also be resolved and the "position" and "post" references will be identified by the same IRI

- The time at which the court cleared the narrator's father of the legal charges is defined as "has_earliest_beginning" of 1937 (which is determined from the time of the previous sentence/event and the word, "eventually")

- Given that the domain being examined is the Holocaust, "charges" is overridden in the idiom dictionary to relate to a LegalEvent, as opposed to a shopping/purchasing event
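As a minimal sketch of how identifiers like dna:post_2905a43e-b249 could be minted, the snippet below appends part of a random UUID to a noun phrase. The `mint_iri` helper is hypothetical, and keeping the first 13 characters of the UUID string is an assumption based only on the shape of the identifiers shown above (8 hex digits, a hyphen, 4 hex digits); DNA's actual scheme may differ.

```python
import re
import uuid


def mint_iri(label, prefix="dna"):
    """Build an IRI such as dna:post_2905a43e-b249 from a noun phrase."""
    # Replace whitespace with underscores so the label works as a local name.
    local_name = re.sub(r"\s+", "_", label.strip())
    # str(uuid4()) looks like 'xxxxxxxx-xxxx-...'; keep the first two groups
    # (assumed truncation, chosen to match the identifiers in the example).
    suffix = str(uuid.uuid4())[:13]
    return f"{prefix}:{local_name}_{suffix}"


first = mint_iri("fabricated charges")
second = mint_iri("fabricated charges")
print(first)
print(second)
```

Two calls with the same label yield different IRIs, which is why "position" and "post" end up as separate instances until a later coreference step decides they name the same employment.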

To summarize, the NLP processing in DNA executes as follows:

- Sentences are split into “chunks” based on analyzing conjunctions and the overall structure

- A sentence is split IF the individual ”chunks” have their own subject and verb

- spaCy’s part of speech, dependency parsing, named entity recognition and pattern matching components are used to:

- Obtain meta information about the narrator (such as family members, date of birth, etc.)

- Capture subjects, verbs, objects and prepositional details from each sentence

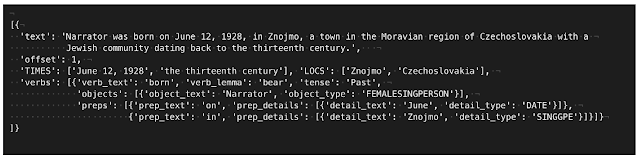

- A Python dictionary is created for each sentence - holding the time, location, subjects/objects and verb details

- An example of the dictionary from the first sentence of Erika Eckstut's narrative is shown in the image, below

- For each dictionary in the array, the sentence details are analyzed to:

- Define an event or state based on the semantics of the root and auxiliary verbs, also utilizing the verbs' clausal complements, adjectival complements and phrasal verb particles

- As an example of this type of analysis, consider the sentence, "mobs carried out bloody attacks", where the root verb's lemma is "carry", with the phrasal particle "out"

- These semantics (executing an attack) are very different than the semantics of the word, "carry", taken in isolation

- The verb semantics are mapped to the DNA ontology to standardize and normalize the output

- The current mapping is based on matching words, synonyms and definitions to the ontology concepts, along with idiomatic processing

- If no mapping is found, the verb is identified as a generic dna:EventAndCondition, which triggers subsequent human review

- Determine the most relevant time and location from the sentence or based on the details from previous sentences

- Capture of the semantic details of the nouns (subjects, objects and prepositional objects)

- This is similar to the verb mapping discussed above

- If no mapping can be established, the noun is identified as a generic owl:Thing, and tagged for human review
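The verb-mapping and context steps above can be sketched as follows. This is a simplified illustration, not DNA's implementation: the lookup table, the dictionary keys and both helper functions are hypothetical, and the two "Placeholder" class names are invented for the example rather than taken from the DNA ontology. Only dna:EventAndCondition and the two movement/return classes appear in the text above.

```python
FALLBACK_EVENT = "dna:EventAndCondition"

# Hypothetical lookup keyed on (lemma, particle). DNA's real mapping also
# uses synonyms, definitions and idioms, which are not reproduced here.
VERB_TO_CLASS = {
    ("move", None): "dna:MovementTravelAndTransportation",
    ("restore", None): "dna:ReturnRecoveryAndRelease",
    # Placeholder class names, NOT taken from the DNA ontology.
    ("carry", "out"): "dna:PlaceholderAttack",
    ("carry", None): "dna:PlaceholderTransport",
}


def classify_verb(lemma, particle=None):
    """Return (ontology class, needs_review) for a lemma and optional particle."""
    event_class = VERB_TO_CLASS.get((lemma, particle))
    if event_class is None:
        # No mapping found: use the generic class and flag for human review.
        return FALLBACK_EVENT, True
    return event_class, False


def process(sentence_dicts):
    """Classify each sentence, carrying time/location forward when missing."""
    last_time, last_location = None, None
    events = []
    for sentence in sentence_dicts:
        last_time = sentence.get("time") or last_time
        last_location = sentence.get("location") or last_location
        event_class, needs_review = classify_verb(
            sentence["lemma"], sentence.get("particle")
        )
        events.append({
            "class": event_class,
            "time": last_time,
            "location": last_location,
            "needs_review": needs_review,
        })
    return events


events = process([
    {"lemma": "move", "time": "1931", "location": "Stanesti"},
    {"lemma": "carry", "particle": "out"},
    {"lemma": "ponder"},
])
for event in events:
    print(event)
```

Keying the table on the lemma together with its particle is what lets "carry out" resolve differently from "carry" alone, and the unmapped verb in the last sentence shows the fallback to the generic class with a review flag.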

The success of this approach is encouraging and has demonstrated that detailed semantic understanding and knowledge graph encoding are possible. But it has also highlighted where improvements are needed. For example, the current idiomatic processing is too labor-intensive. It was built that way to establish the basic processing logic and understand the required functionality. Now it is being evolved to better exploit WordNet, VerbNet and other lexical sources, and to consider different machine learning alternatives.

Stay tuned for future iterations!
